We attended the Go Israel March 2022 Meetup. Here’s what we learned

A review of what the Israeli Golang community is talking about in 2022, the fun things that happened at the meetup, and how it all relates to us at RecoLabs’ R&D.

With more and more companies using Go in production, innovating in open source, and pushing the language and libraries forward, the Go Israel community is growing stronger. So when Netanel Katzburg, a RecoLabs backend developer, suggested we attend the Go Israel meetup at JFrog TLV, it was a no-brainer. We went to learn more about Go, compare our experience with that of other companies in the space, and possibly find interesting collaborations and opportunities. With two interesting talks, some mingling, a raffle (which we won!), and even 🍕 (with vegan options! 💚), what’s not to like? Nothing, TBH.

Single (threaded) and ready (dreaded) to mingle

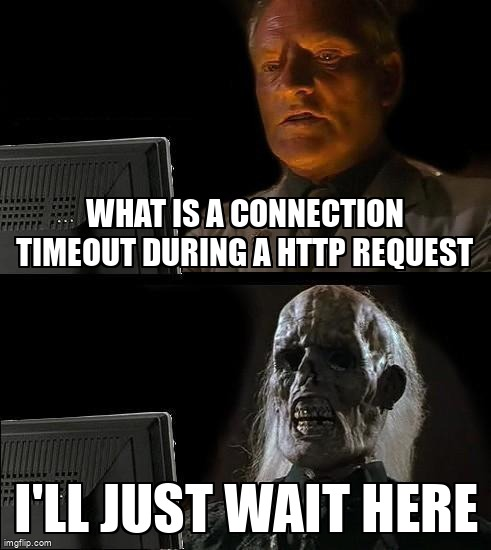

First of all, we met the legendary Guy Brandwine! We want to take this opportunity to thank Guy for arranging this meetup (and for co-leading the Go Israel community). Thanks Guy! Please accept this meme as a gift.

While walking around the meetup venue (thanks JFrog, your offices are awesome!), we met backend developers from Cypago and discussed how they use Go as part of their ETL process. It turned out we had some mutual connections from our professional past. It’s always fun to catch up!

The crowd was quite diverse: developers from companies similar to ours upskilling together; tech managers trying to catch up on the latest and greatest as they transition back into development after years in management; young developers looking for connections; and nice people from the JFrog HR department.

We won a raffle and donated our prize!

Before we get into the details of the talks, we'd like to highlight something very cool that happened at the meetup: towards the end, we won two Golang licences! Since we obviously already had a few, we decided to donate the ones we won. We gave one to the excellent Women Who Go, who are working to build a more diverse and inclusive Golang community, and one to the Golang Subreddit, which has helped us solve Go issues on multiple occasions.

Alongside the many genuine and wholesome responses, we also got this absolute MVP response:

(don’t worry, we gave the licence to someone else).

Talk #1: Let’s Go NATS, by Guy Brandwine

You can find Guy’s presentation at this link and the example code in this GitHub repository.

Intro to NATS, or why should we even listen?

Guy’s talk felt like the best walkthrough of a new technology we’ve ever seen - exactly what we’d hope to find if we googled it, but also super-guided and fun.

Guy started strong by telling us exactly what we, as developers, wanted to hear: what makes NATS different in a world overburdened by communication options. The answer is that it's a single technology for the whole communication stack, which isn't how architecture is usually built - normally the architecture emerges slowly and ends up cobbled together from many different technologies. NATS allows us to securely communicate across hybrid clouds, on-prem servers, edge devices, web apps, mobile and more. And, obviously, it’s written in Go!

The business card, which is one of the first things one should check when considering new tech for the stack, is also impressive. If a project is small, abandoned, deprecated, or governed by a weak organization, you wouldn't want to build your own business or tech stack on top of it. NATS is incubating in the CNCF, alongside Argo, gRPC, OpenTelemetry, and other impressive projects. With more than 10K GitHub stars and users at major companies such as Netlify, Baidu, and Alibaba, it clearly has serious adoption as well.

The demonstration, or: you had my curiosity; now you have my attention

Guy, like all good programmers, wanted to get to the code ASAP! So he launched into a mix of live demo and presentation covering the two main messaging patterns NATS supports:

- Pub/sub, as one would use in an RSS feed or a group chat.

- Client/server (request/response), as one would use in a queue with multiple workers or a µService architecture with load balancers and service discovery.
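To make the difference between the two patterns concrete, here is a toy, in-memory sketch in plain Go. It is our own illustration, not the NATS client API: the `broker` type and its `subscribe`, `addWorker`, and `publish` methods are hypothetical, and it skips everything a real server does (networking, wildcards, flow control). With pub/sub, every subscriber on a subject gets its own copy of each message; with a worker queue, each message goes to exactly one worker.

```go
package main

import (
	"fmt"
	"sync"
)

// broker is a hypothetical, synchronous, single-process stand-in for a
// message server. It is NOT the NATS API.
type broker struct {
	mu      sync.Mutex
	subs    map[string][]func(string) // pub/sub: every handler sees every message
	workers map[string][]func(string) // worker queue: handlers take turns
	next    map[string]int            // round-robin cursor per worker subject
}

func newBroker() *broker {
	return &broker{
		subs:    map[string][]func(string){},
		workers: map[string][]func(string){},
		next:    map[string]int{},
	}
}

// subscribe registers a handler that receives every message on subject.
func (b *broker) subscribe(subject string, h func(string)) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.subs[subject] = append(b.subs[subject], h)
}

// addWorker registers a worker; each message on subject reaches only one worker.
func (b *broker) addWorker(subject string, h func(string)) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.workers[subject] = append(b.workers[subject], h)
}

// publish fans msg out to all subscribers and hands it to one worker.
func (b *broker) publish(subject, msg string) {
	b.mu.Lock()
	fanOut := append([]func(string){}, b.subs[subject]...)
	var worker func(string)
	if ws := b.workers[subject]; len(ws) > 0 {
		worker = ws[b.next[subject]%len(ws)]
		b.next[subject]++
	}
	b.mu.Unlock()

	// Handlers run outside the lock so they could publish themselves.
	for _, h := range fanOut {
		h(msg)
	}
	if worker != nil {
		worker(msg)
	}
}

func main() {
	b := newBroker()
	var alice, bob []string
	b.subscribe("chat.room1", func(m string) { alice = append(alice, m) })
	b.subscribe("chat.room1", func(m string) { bob = append(bob, m) })

	perWorker := map[string]int{}
	b.addWorker("jobs", func(string) { perWorker["w1"]++ })
	b.addWorker("jobs", func(string) { perWorker["w2"]++ })

	b.publish("chat.room1", "hello")
	for i := 0; i < 4; i++ {
		b.publish("jobs", fmt.Sprintf("job-%d", i))
	}
	fmt.Println(len(alice), len(bob), perWorker["w1"], perWorker["w2"]) // 1 1 2 2
}
```

Both chat subscribers see the single "hello", while the four jobs are split two and two between the workers.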

As is usually the case with Go, the demo code was super straightforward and easy to understand. Instead of repeating the examples in their entirety here (you can find them in the presentation and the GitHub repo), we’ll list some of the highlights.

Deployment options and Docker size

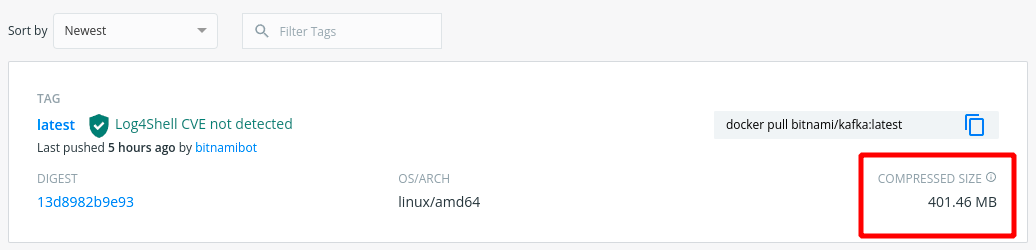

The NATS Docker image is tiny compared to Apache Kafka's, for example. According to the NATS documentation, “The NATS server Docker image is extremely lightweight, coming in under 10 MB in size”, whereas the latest Kafka image on Bitnami clocks in at a whopping 400 MB!

Guy also mentioned that it's fairly simple to run NATS from source, which has a few advantages: it's easier to experiment with different configurations, and it may be a bit faster. We couldn't ask why at the time, but it appears you can get up to a 10% performance boost by running it directly on your own architecture, since some CPU-specific optimizations become possible.

Questions about the pub/sub pattern

During the presentation, Netanel from our team asked whether NATS is meant to replace gRPC. Guy responded: “This is a really big question! I'll get to comparisons later. And ask yourself this: does gRPC come to replace HTTP?”

As Guy walked through the presentation, he did a captivating job of building the example slowly, with pauses like “this is what I added to the publisher” and “this is what I added to the subscriber”. Doing this step by step in a real demo was an expert and fearless way to teach the NATS way of doing things.

As Guy demonstrated sending 10 million messages in 5 seconds 🤯, Tamir from our team asked, “Who deals with the buffering?” Guy answered that NATS is a server, and there isn't a lot of client-side buffering. The Δ between the publisher pushing a message and the subscriber reading it was very small. Guy said that NATS isn’t about a ton of buffering, just raw performance. He attributed much of the performance to NATS being a TCP-based protocol rather than an HTTP-based one.

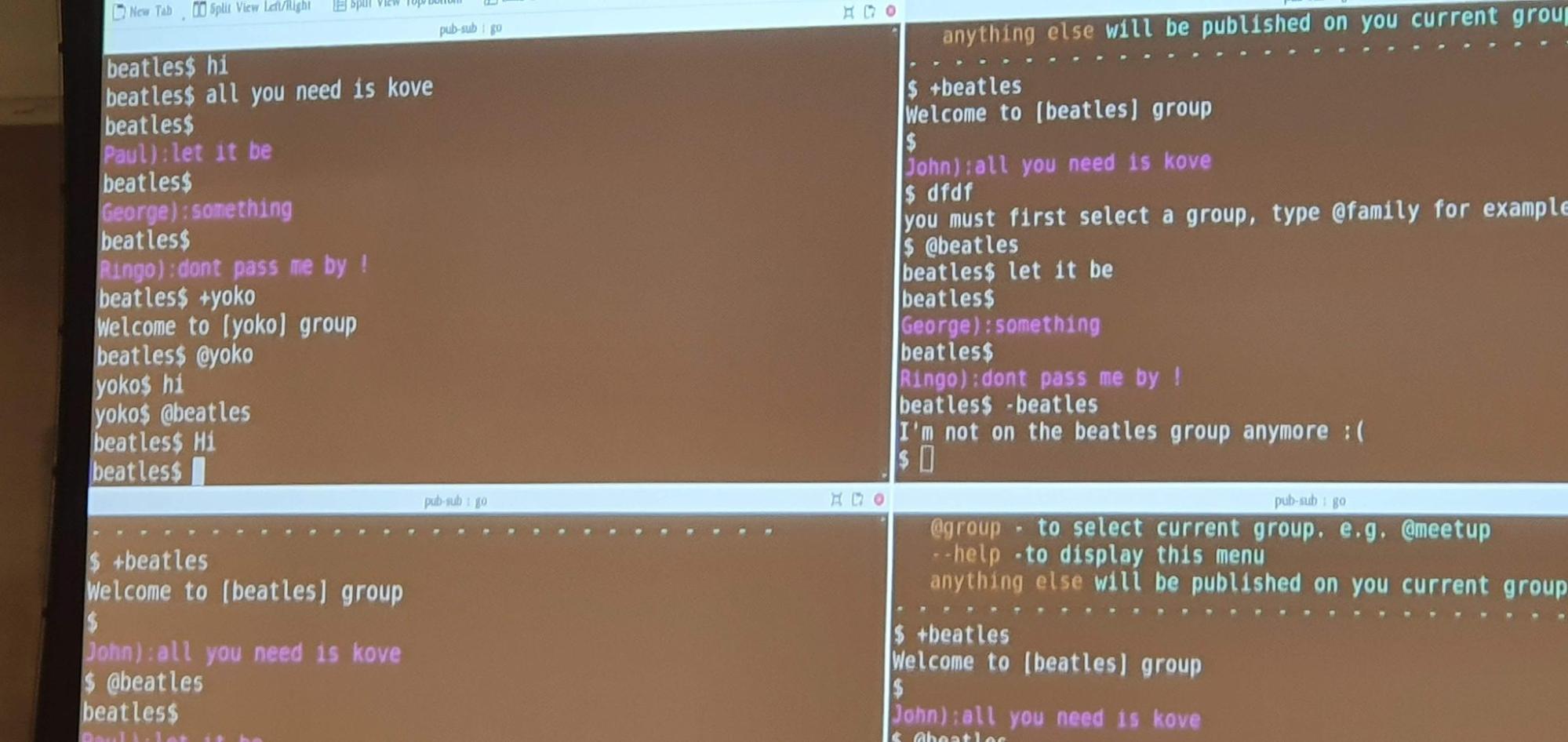

Final part of pub/sub - all you need is love

To close the pub/sub example, Guy showed a full group chat application. His very relatable reason for building more: “My fingers were tingling.”

The subscribe method had an interesting pattern here: a callback that handles printing each message to the console. In the demo it felt a bit toy-ish, but the fact that it's implemented so simply points to a common design pattern: a data sink for a subscriber that receives data from multiple publishers.

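```go
import (
	"fmt"

	"github.com/nats-io/nats.go"
)

// subscribe registers printMsg as the callback for every message on subj.
// Note: this is not concurrency safe, nor does it check whether a
// subscription with a similar name already exists!
func subscribe(nc *nats.Conn, subj string, printMsg func([]byte, string)) (*nats.Subscription, error) {
	sub, err := nc.Subscribe(subj, func(msg *nats.Msg) {
		printMsg(msg.Data, msg.Subject)
	})
	if err != nil {
		return nil, err
	}
	// Flush round-trips to the server, giving it a chance to report
	// problems with the subscription before we check LastError.
	if err := nc.Flush(); err != nil {
		return nil, fmt.Errorf("subscribe flush: %w", err)
	}
	if err := nc.LastError(); err != nil {
		return nil, fmt.Errorf("subscribe error: %w", err)
	}
	fmt.Printf("Welcome to [%s] group\n", subj)
	return sub, nil
}
```

A really strong claim from Guy was that “The UI is lacking, but I'm confident this system is already ready from a scale perspective for millions of users”. An incredible statement for a server whose Docker image is under 10 MB, running on Guy's machine!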
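The data-sink idea behind that subscribe callback doesn't depend on NATS. Here is a minimal, stdlib-only sketch of it, using a hypothetical `msg` type and `runSink` helper of our own: several publisher goroutines send into one channel, and a single goroutine applies one handler to everything, so the handler itself never runs concurrently and needs no locking.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// msg is a hypothetical message: a payload plus the subject it came from.
type msg struct {
	data    []byte
	subject string
}

// runSink drains in and calls handle for each message from a single
// goroutine. The returned channel is closed once in is closed and drained.
func runSink(in <-chan msg, handle func([]byte, string)) <-chan struct{} {
	done := make(chan struct{})
	go func() {
		defer close(done)
		for m := range in {
			handle(m.data, m.subject)
		}
	}()
	return done
}

func main() {
	in := make(chan msg)
	var lines []string
	done := runSink(in, func(data []byte, subj string) {
		lines = append(lines, fmt.Sprintf("[%s] %s", subj, data))
	})

	// Several concurrent publishers feed the same sink.
	var wg sync.WaitGroup
	for _, room := range []string{"room1", "room2", "room3"} {
		wg.Add(1)
		go func(room string) {
			defer wg.Done()
			in <- msg{data: []byte("hi"), subject: room}
		}(room)
	}
	wg.Wait()
	close(in)
	<-done // after this, reading lines is safe

	sort.Strings(lines) // arrival order is nondeterministic
	fmt.Println(lines)  // [[room1] hi [room2] hi [room3] hi]
}
```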

The Request/Response example

As expected, the Request/Response pattern has different rules from pub/sub, since it solves different problems: as mentioned, a queue with multiple workers, or µServices with discovery. The code itself was neatly similar to the pub/sub example, with a timeout-able “listenAndServe” pattern.

Since the example follows the exact same pattern as before, it was reliably easy to follow. Another shoutout to Guy, who knows how to build a lecture! Repeating patterns makes the audience feel super smart.

As a focus point this time, Guy talked a lot about performance, and the demo added some real async examples using sync.WaitGroup. Now we're cooking with asynchronous ⛽️

Can I use this?! NATS vs HTTP vs gRPC.

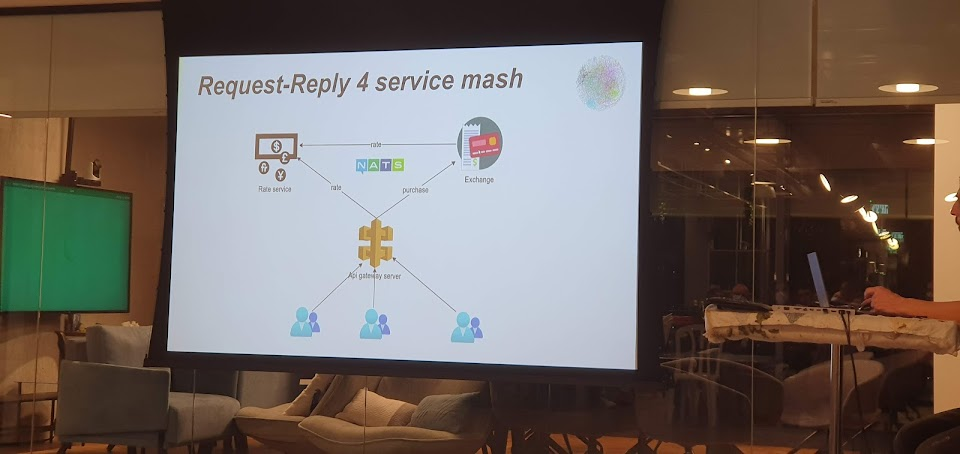

Example use case

In the final part of the talk, Guy explained where one might use this technology. One use case he mentioned was a service mesh built on the NATS request/response pattern: behind an API gateway, each service subscribes to a subject (multiple services subscribing to the same one is A-OK). He gave a real-life example of a crypto exchange, with NATS handlers instead of HTTP handlers for the different APIs, which reside in different services. Sometimes the API is a simple proxy, but one can also put real logic in it (Backend-for-Frontend).
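At its core, that gateway idea is a table from subjects to handlers, sitting where an HTTP router would otherwise be. The toy sketch below is ours, not from the talk: the subject names (`exchange.prices.get`, `exchange.orders.create`), the `handler` type, and the in-process `gateway` map are hypothetical. In a real deployment each handler would live in its own service, subscribed to its subject on the NATS server, rather than being called in-process.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// handler is a hypothetical "NATS handler": request bytes in, reply bytes out.
type handler func(payload []byte) ([]byte, error)

// gateway maps subjects to handlers, standing in for subscriptions on a server.
type gateway map[string]handler

// dispatch routes a request to the handler registered for subject.
func (g gateway) dispatch(subject string, payload []byte) ([]byte, error) {
	h, ok := g[subject]
	if !ok {
		return nil, fmt.Errorf("no responders for %q", subject)
	}
	return h(payload)
}

func main() {
	g := gateway{
		// A plain proxy-style handler.
		"exchange.prices.get": func([]byte) ([]byte, error) {
			return []byte(`{"BTC":"n/a"}`), nil
		},
		// A Backend-for-Frontend handler with some logic of its own.
		"exchange.orders.create": func(p []byte) ([]byte, error) {
			var order struct {
				Qty int `json:"qty"`
			}
			if err := json.Unmarshal(p, &order); err != nil {
				return nil, err
			}
			if order.Qty <= 0 {
				return nil, errors.New("qty must be positive")
			}
			return []byte(`{"status":"accepted"}`), nil
		},
	}
	out, err := g.dispatch("exchange.orders.create", []byte(`{"qty":2}`))
	fmt.Println(string(out), err) // {"status":"accepted"} <nil>
}
```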

Comparison selling points

There are a few main selling points when comparing to gRPC:

- Simplicity of a single NATS server instead of a complicated service discovery mechanism. This helps with many things, including debuggability.

- Security and authentication are easy out of the box (OOTB), whereas with gRPC one usually needs various tools and often has to write code.

- Built-in observability (tracing and metrics). The downside is that third-party tooling will probably support more widely adopted tech (gRPC/HTTP) first.

- The performance seems impressive. In a request/response demo, HTTP took 1 second, gRPC took 600ms and NATS took 300ms.

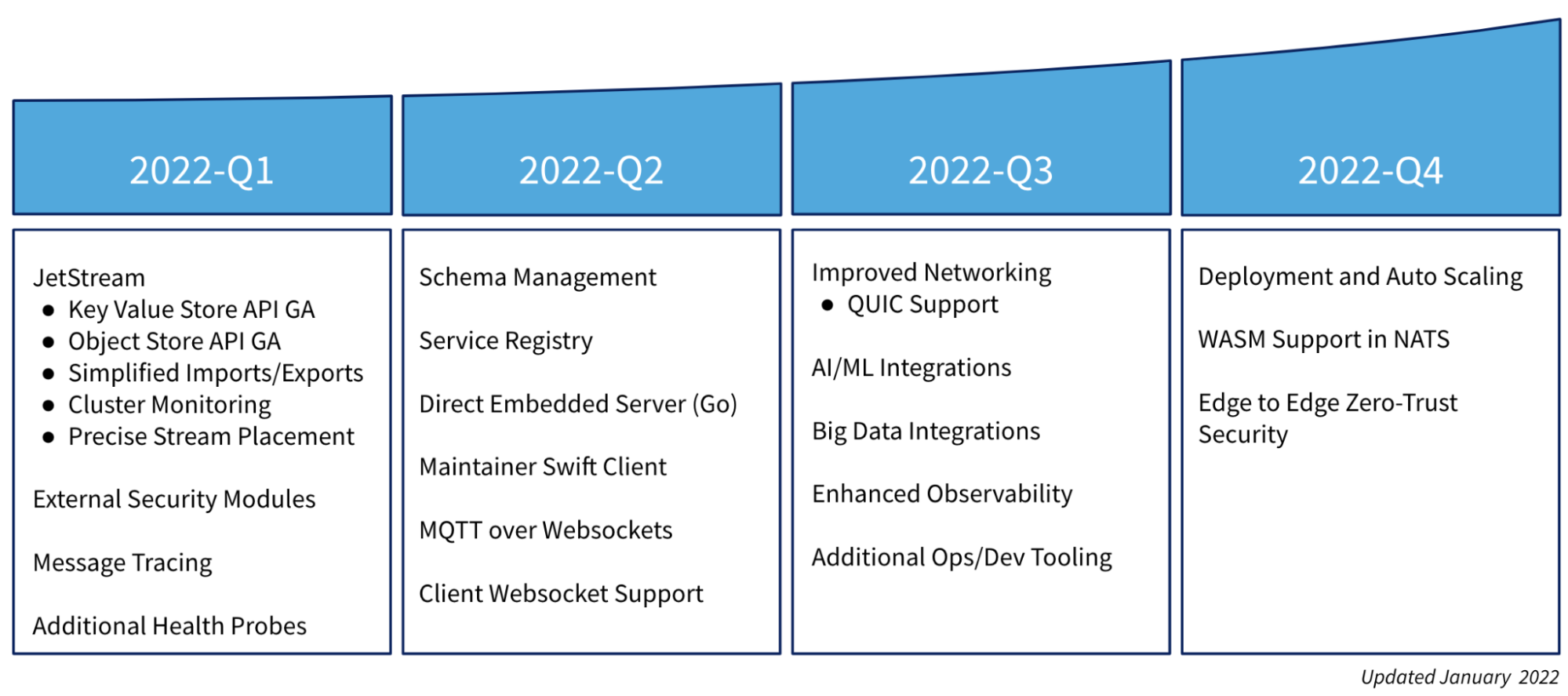

There are also extra features: JetStream for persistence, WebSockets (coming soon), and a great FAQ (it's nice to think of documentation as a feature). NATS also has a very ambitious roadmap.

Final questions

With all this new information, we definitely had some more questions.

Q: What are the development costs of using raw NATS, compared to autogenerated typed clients that gRPC offers?

A: Guy told us there's a library that sits on top of NATS (NATS-over-gRPC), but it runs as middleware in the service, and NATS's native payloads are plain byte arrays. Another consideration Guy raised is that developer time isn’t only about typing: being able to provide a local environment that fully simulates a complicated cloud environment is super important. One can get a local experience comparable to the full cloud environment, with sidecar plugins on K8s for the capabilities that are cloud-specific.
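Because NATS payloads are raw byte slices, the typing that gRPC's generated clients give you has to come from somewhere. One hypothetical approach is a thin codec layer of your own. The sketch below uses `encoding/json` and Go generics, with a stand-in `send` function in place of a real NATS request; the `Order`/`Ack` types and the `call` helper are ours, not part of NATS or of the library Guy mentioned.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Order and Ack are hypothetical request/response types.
type Order struct {
	Symbol string `json:"symbol"`
	Qty    int    `json:"qty"`
}

type Ack struct {
	ID string `json:"id"`
}

// call marshals req, hands the bytes to send (a stand-in for a NATS request),
// and unmarshals the reply bytes into Resp.
func call[Req, Resp any](send func([]byte) ([]byte, error), req Req) (Resp, error) {
	var resp Resp
	payload, err := json.Marshal(req)
	if err != nil {
		return resp, err
	}
	reply, err := send(payload)
	if err != nil {
		return resp, err
	}
	err = json.Unmarshal(reply, &resp)
	return resp, err
}

func main() {
	// Fake transport: a "service" that acknowledges any order it can decode.
	fake := func(b []byte) ([]byte, error) {
		var o Order
		if err := json.Unmarshal(b, &o); err != nil {
			return nil, err
		}
		return json.Marshal(Ack{ID: fmt.Sprintf("%s-%d", o.Symbol, o.Qty)})
	}
	ack, err := call[Order, Ack](fake, Order{Symbol: "BTC", Qty: 2})
	fmt.Println(ack.ID, err) // BTC-2 <nil>
}
```

The trade-off is that the schema lives in Go types shared by convention rather than in a `.proto` file that tooling can check across languages.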

Q: What is NATS’s relationship with K8s?

A: One can run NATS with KEDA, but Guy explained that he hadn't found any smart integrations with K8s because he never went looking: thanks to the simplicity and performance of NATS, he didn't need them.

Talk #2: From Zero to GraphQL hero, by Yoni Davidson

You can find Yoni’s presentation at this link and the example code in this GitHub repository.

After a short break we reconvened for the second talk, this time by Yoni Davidson of ariga.io, who are building an operational data graph. They are an open-core company (like Confluent and Elastic).

Yoni started with a high-level review of GraphQL, and then introduced us to Ent, including a live demo.

Yoni claimed, “I'll convince you that you need to think about GraphQL as a tech, and then I'll convince you that you want to use Ent to evaluate it.” His main point was that no matter what your field is, you're usually building an application around a data model, and that GraphQL is a great language for that.

Yoni compared GraphQL mostly with REST. Where's gRPC? No love for gRPC apparently 💔

There was one funny moment when Yoni was reviewing the spec. In GraphQL’s type definitions you use the “!” character. We asked what it meant, and even a veteran like Yoni was confused: his exact answer was “Oh, it means Optional. No! Required! Or not? Yes! No!” And the crowd broke into a discussion.
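For the record, the answer is “required”: in GraphQL's schema language, `!` marks a type as non-null. One common way to mirror that in Go (our assumption, not something shown in the talk) is a plain value for a non-null field and a pointer for a nullable one. The `User` type and `displayName` function below are hypothetical.

```go
package main

import "fmt"

// User mirrors a hypothetical GraphQL type:
//
//	type User {
//	  name: String!      # non-null: always present
//	  nickname: String   # nullable: may be null
//	}
type User struct {
	Name     string  // String!  -> plain value, cannot be absent
	Nickname *string // String   -> pointer, nil means null
}

// displayName falls back to Name when the nullable Nickname is null.
func displayName(u User) string {
	if u.Nickname != nil {
		return *u.Nickname
	}
	return u.Name
}

func main() {
	nick := "gopher"
	fmt.Println(displayName(User{Name: "Yoni"}))                  // Yoni
	fmt.Println(displayName(User{Name: "Yoni", Nickname: &nick})) // gopher
}
```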

This was followed by another amusing moment, as Yoni started praying to the demo gods that the demo would work and he wouldn’t have to fall back on the backup in his slide deck. Luckily, they were on his side.

After reviewing the spec, Yoni demonstrated how Ent works, and showed that we don't really want to write a ton of JOINs in each GraphQL resolver, so Ent does that for us. It’s an ORM with “Automatic GraphQL”, which allows for graph modelling on any database, so a dedicated graph database is no longer required.

A note to JetBrains: Yoni used VS Code to read the code (since it’s easy to increase the font size there), and GoLand to write and execute it, which, according to him, he did to “not anger the demo gods”. JetBrains really need to just support “increase font size easily” OOTB - it’s ridiculous that this super simple and intuitive feature is so broken in IntelliJ!

Question time

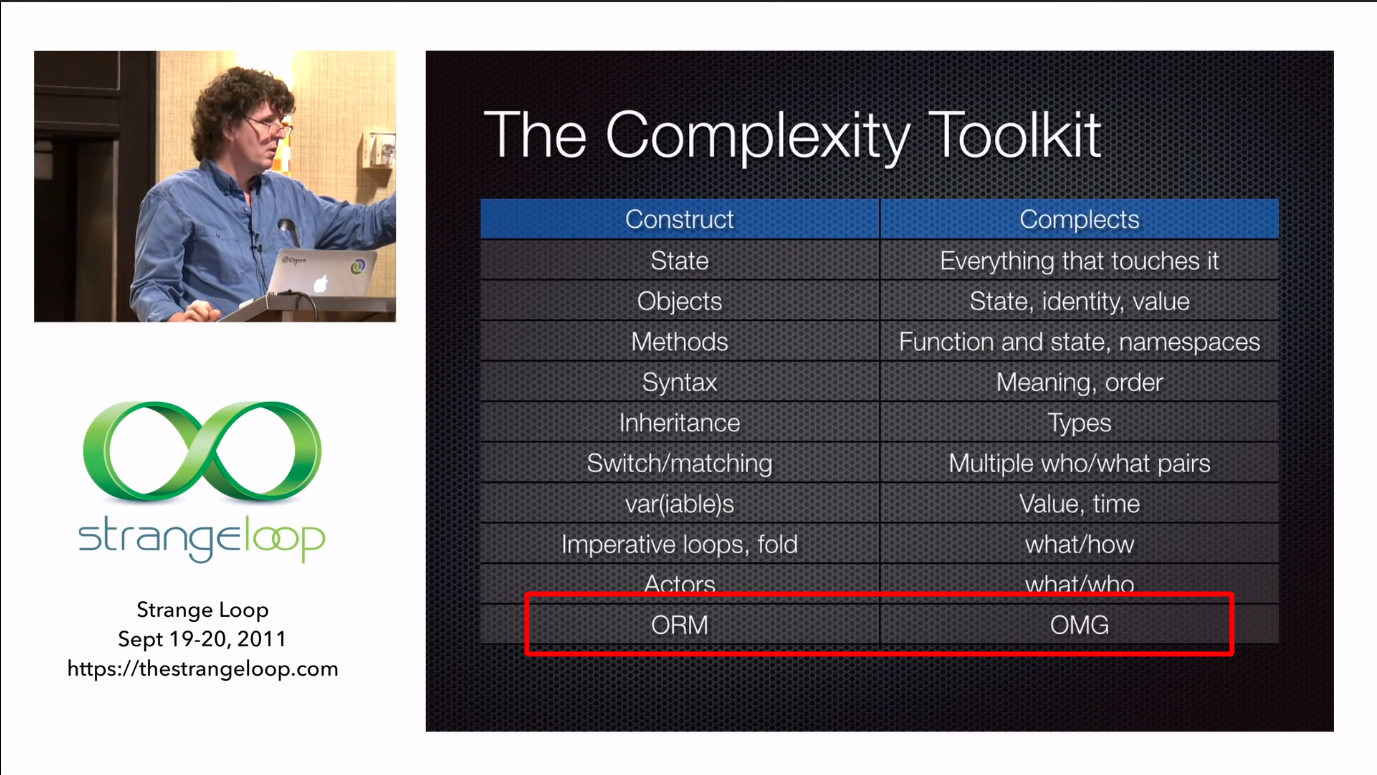

In “Simple Made Easy”, Rich Hickey said that, in the end, SQL is simpler than ORMs.

Our question was based on the fact that at RecoLabs we use a dead-simple ORM, with a lot of our declarative data operations done in SQL or very simple Go. We asked how Ent compares to that, and Yoni said that Ent guides you into best practices, but it has a lot of “escape hatches”. We tried to understand whether Ariga only maintains the project, but Yoni said that they’re completely dogfooding it: all of their services run on it. So we asked how often Ariga uses the escape hatches. Interestingly, Yoni said he can develop his escape hatches into Ent if he needs them; however, the project has a lot of mileage, and since its main value proposition is that a large group of developers can develop safely, the escape hatches shouldn’t normally be needed.

Then Nir Barak from our team asked how Ent handles database migrations. Yoni explained that Ent supports automigrate. Nir asked whether that wasn't a bit scary for production environments, and Tomer, who was in the audience and also works at Ariga, chimed in: for production, Ent has an offline mode, and by default migrations are append-only anyway.
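To illustrate what “append-only” means for a migration, here is a toy diff in Go: it compares the columns a table has with the columns the schema wants, and only ever emits `ADD COLUMN` statements, never drops. This is our own simplified sketch, not Ent's migration engine; the `appendOnlyDiff` function, table, columns, and SQL types are hypothetical, and real tools also handle indexes, type changes, and much more.

```go
package main

import (
	"fmt"
	"sort"
)

// appendOnlyDiff returns ALTER statements that add columns present in want
// but missing from have. Columns that exist only in have are left untouched.
func appendOnlyDiff(table string, have, want map[string]string) []string {
	var stmts []string
	for col, typ := range want {
		if _, ok := have[col]; !ok {
			stmts = append(stmts, fmt.Sprintf("ALTER TABLE %s ADD COLUMN %s %s;", table, col, typ))
		}
	}
	sort.Strings(stmts) // map iteration order is random; keep output stable
	return stmts
}

func main() {
	have := map[string]string{"id": "BIGINT", "name": "TEXT", "legacy_flag": "BOOLEAN"}
	want := map[string]string{"id": "BIGINT", "name": "TEXT", "email": "TEXT", "age": "INT"}
	for _, s := range appendOnlyDiff("users", have, want) {
		fmt.Println(s)
	}
	// ALTER TABLE users ADD COLUMN age INT;
	// ALTER TABLE users ADD COLUMN email TEXT;
	// (legacy_flag is kept, not dropped)
}
```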

We also asked about validation rules (for example, a string being an email address) and about security rules. Yoni said that Ent's philosophy is to push as much business logic (validation, for example) as possible down to the schema level, so validation rules live next to the schema, and Ent has privacy built into the schema level as well.
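A minimal sketch of the “validation lives next to the schema” idea in plain Go: each field declares its validators, and every write runs them before touching the database. The `field` type, `validate` function, and validators are hypothetical; this is not Ent's schema API. The email check leans on the standard library's `net/mail.ParseAddress`, which accepts full RFC 5322 addresses (including forms like `Name <a@b.c>`), so a production check might want to be stricter.

```go
package main

import (
	"errors"
	"fmt"
	"net/mail"
)

// field is a hypothetical schema field with its validators attached.
type field struct {
	name       string
	validators []func(string) error
}

func notEmpty(s string) error {
	if s == "" {
		return errors.New("must not be empty")
	}
	return nil
}

func isEmail(s string) error {
	_, err := mail.ParseAddress(s)
	return err
}

// userSchema declares the rules once, right next to the fields.
var userSchema = []field{
	{name: "name", validators: []func(string) error{notEmpty}},
	{name: "email", validators: []func(string) error{notEmpty, isEmail}},
}

// validate runs every field's validators against a record before a write.
func validate(schema []field, record map[string]string) error {
	for _, f := range schema {
		for _, v := range f.validators {
			if err := v(record[f.name]); err != nil {
				return fmt.Errorf("%s: %w", f.name, err)
			}
		}
	}
	return nil
}

func main() {
	fmt.Println(validate(userSchema, map[string]string{"name": "gopher", "email": "gopher@example.com"}))  // <nil>
	fmt.Println(validate(userSchema, map[string]string{"name": "gopher", "email": "not-an-email"}) != nil) // true
}
```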

Summary

Overall, it was a great meetup. We were somewhat frustrated - and sadly, not surprised - that the crowd was dominated by men. Getting involved with Women Who Go is definitely one of our action items from the meetup. But we did meet interesting people, learn about cool technologies, eat pizza, win prizes, and even receive CVs from people who wanted to join us after meeting us.

Since continuous growth is part of our engineering culture, expect to see us at the next meetups as well; perhaps next time we'll be the ones behind the podium!