ABCDEFG - Fun with AWS ALB

A funny issue we encountered while implementing load balancing with AWS.

The other day, as we were building the infrastructure to go online with our product (a SaaS web-based solution for business logic observability), a funny thing happened.

SOME BACKGROUND ABOUT THE INCIDENT

In true startup fashion, we aimed for quick product delivery: until a few weeks ago, we developed our product without exposing it publicly. While we focused on our customers' success and waited for a round of pen testing to complete (so we'd know we were good in terms of security), our customers accessed the product over a VPN. Once we knew it was mature and safe enough to go online, we took the necessary steps to move forward: adding a WAF, preventing domain enumeration, and enabling internal and external ingresses.

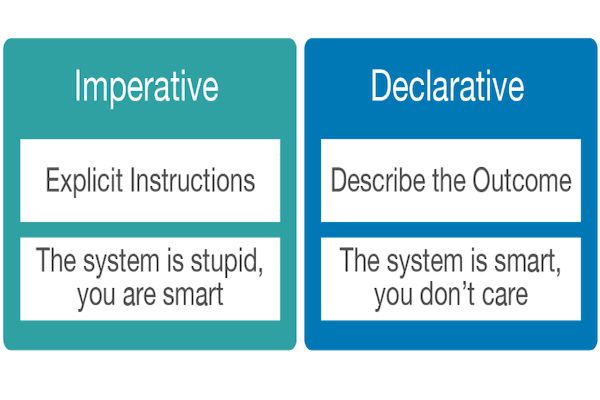

Up to that point, we'd been using the NGINX Ingress Controller to manage the ingresses for our EKS clusters, but making it do all of the above declaratively was a challenge. We needed to find a different route!

RecoLabs' infrastructure is 100% declarative, which reduces mutability, minimizes side effects, produces more understandable code, and makes our environments more scalable and repeatable.

ENTER AWS ALB!

The ALB controller (deployed with Helm) watches for changes in ingresses (configured through annotations) and adjusts the load balancer rules as needed. We set up two ALB ingress groups per cluster, one internal and one internet-facing, along with the certificates and profiles that go with them. It worked! Yay!

Internet-facing Ingress:

    ❯ k describe ingress reco-ui
    Name:         reco-ui
    Namespace:    acme
    Address:      k8s-preprodinternetfa-****.eu-central-1.elb.amazonaws.com
    Rules:
      Host                      Path  Backends
      ----                      ----  --------
      acme.preprod.recolabs.ai
                                /     reco-ui:80 (x.x.x.x:80)
    Annotations:  alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-central-1:****:certificate/****
                  alb.ingress.kubernetes.io/group.name: preprod-internet-facing
                  alb.ingress.kubernetes.io/scheme: internet-facing
                  alb.ingress.kubernetes.io/ssl-policy: ELBSecurityPolicy-TLS-1-2-Ext-2018-06
                  alb.ingress.kubernetes.io/target-type: ip
                  alb.ingress.kubernetes.io/wafv2-acl-arn: arn:aws:wafv2:eu-central-1:****:regional/webacl/waf-preprod/****
                  external-dns.alpha.kubernetes.io/hostname: *.preprod.recolabs.ai
                  external-dns.alpha.kubernetes.io/ingress-hostname-source: annotation-only
                  kubernetes.io/ingress.class: alb
                  meta.helm.sh/release-name: reco-env
                  meta.helm.sh/release-namespace: acme

Internal Ingress:

    ❯ k describe ingress jupyter-hub-acme
    Name:         jupyter-hub-acme
    Namespace:    acme
    Address:      internal-k8s-preprodinternal-****.eu-central-1.elb.amazonaws.com
    Rules:
      Host                           Path  Backends
      ----                           ----  --------
      acme.jhub.preprod.recolabs.ai
                                     /     jupyter-hub-acme-proxy-public:http (x.x.x.x:8000)
    Annotations:  alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-central-1:****:certificate/****
                  alb.ingress.kubernetes.io/group.name: preprod-internal
                  alb.ingress.kubernetes.io/scheme: internal
                  alb.ingress.kubernetes.io/ssl-policy: ELBSecurityPolicy-TLS-1-2-Ext-2018-06
                  alb.ingress.kubernetes.io/target-type: ip
                  alb.ingress.kubernetes.io/wafv2-acl-arn: arn:aws:wafv2:eu-central-1:****:regional/webacl/waf-preprod/****
                  external-dns.alpha.kubernetes.io/hostname: *.jhub.preprod.recolabs.ai
                  external-dns.alpha.kubernetes.io/ingress-hostname-source: annotation-only
                  kubernetes.io/ingress.class: alb
                  meta.helm.sh/release-name: jupyter-hub
                  meta.helm.sh/release-namespace: acme

At RecoLabs, each developer gets their own isolated environment consisting of all required AWS resources (S3, RDS, Neptune) and a namespace in our dev EKS cluster. For ease of use and agility, we chose to name these environments after colors, so people could say stuff like "I'm deploying to Pink! Any objections?" or "Check out the cool Indigo feature I created."

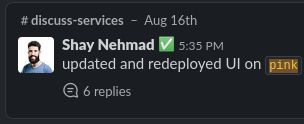

Where the **** Did YELLOW Go?

About a month after we implemented the ALB change, our VP R&D Shay Nehmad attempted to log in to the environment assigned to him - YELLOW - and was taken aback: he received a 502 error!

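A quick slice in Datadog for “502” and “yellow” revealed that he was actually getting these errors from VOID - our fake catch-all domain.

But why was YELLOW the only banned environment? A review of the documentation revealed the answer: by default, the ALB controller orders the rules of ingresses within the same group by the lexical order of their namespace/name, and Y is indeed one of the final letters in the English alphabet, following V. VOID's catch-all rule was therefore evaluated before YELLOW's rule and swallowed its traffic, while every environment that sorts before VOID kept working.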
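To make the failure mode concrete, here is a minimal simulation of first-match rule evaluation under lexical ordering. It is a sketch under stated assumptions, not the controller's actual code: the ingress names, the `dev.example.com` hostnames, and the idea that VOID uses a wildcard host pattern are all hypothetical, since the post does not show the real catch-all configuration.

```python
from fnmatch import fnmatchcase

# Hypothetical ingress names mapped to host patterns (not the real RecoLabs values).
# Assumption: the catch-all "void" ingress matches any subdomain via a wildcard.
INGRESSES = {
    "yellow": "yellow.dev.example.com",
    "pink": "pink.dev.example.com",
    "void": "*.dev.example.com",
    "indigo": "indigo.dev.example.com",
}


def route(host, ordered_names):
    """Return the first ingress whose host pattern matches (first match wins)."""
    for name in ordered_names:
        if fnmatchcase(host, INGRESSES[name]):
            return name
    return None


# Default ordering modeled as plain lexical order of the ingress names.
default_order = sorted(INGRESSES)

pink_target = route("pink.dev.example.com", default_order)
yellow_target = route("yellow.dev.example.com", default_order)
print(default_order, pink_target, yellow_target)
```

In this model, only names that sort after `void` are shadowed by the catch-all, which matches what we saw: PINK and INDIGO kept working while YELLOW did not.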

CAN WE FIX IT? YES, WE CAN!

Shay was able to continue working after we manually reordered the rules, and a backlog ticket was created to permanently resolve the issue (we work fast to deliver and then idiomatically to maintain).

We discovered two viable options after some research: using weights to control the rule order, or changing the names so that VOID - our fake catch-all domain - is last on the list.

"WEIGHING" OUR OPTIONS

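Weights: The alb.ingress.kubernetes.io/group.order annotation takes a number between 1 and 1000 and controls the position of an ingress within its group. The lower the number, the earlier its rules are evaluated, so giving the catch-all the highest number pushes it to the end of the list.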
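The effect of an explicit order can be sketched by sorting on the order value first and the name second. This is an illustrative model only: the names, hostnames, and the wildcard catch-all are hypothetical, and the default order of 0 for ingresses without the annotation reflects our reading of the controller documentation rather than anything shown in this post.

```python
from fnmatch import fnmatchcase

# (ingress name, host pattern, group.order) -- all values are hypothetical.
# Assumption: ingresses without an explicit group.order are treated as 0.
RULES = [
    ("yellow", "yellow.dev.example.com", 0),
    ("pink", "pink.dev.example.com", 0),
    ("void", "*.dev.example.com", 1000),  # catch-all pushed to the end
    ("indigo", "indigo.dev.example.com", 0),
]


def evaluation_order(rules):
    """Sort by explicit order first, then lexically by name as the tie-breaker."""
    return sorted(rules, key=lambda rule: (rule[2], rule[0]))


def route(host, rules):
    """Return the first ingress whose host pattern matches (first match wins)."""
    for name, pattern, _order in evaluation_order(rules):
        if fnmatchcase(host, pattern):
            return name
    return None


ordered_names = [name for name, _pattern, _order in evaluation_order(RULES)]
yellow_target = route("yellow.dev.example.com", RULES)
unknown_target = route("no-such-env.dev.example.com", RULES)
print(ordered_names, yellow_target, unknown_target)
```

With the catch-all carrying the largest order value, YELLOW is matched by its own rule, and only hosts that no environment claims fall through to VOID.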

Names: Originally we didn't want to break anything by using "null," so we went with Void. Renaming it to zvoid, zzz, or something similar seemed like a poor alternative. Furthermore, none of these names were entirely future-proof, because what if a new customer named zzzzork came along?

Because the issue had a minor impact (only on the YELLOW environment) and was easily corrected manually, the weight solution was added to the next sprint. Eight days after we initially discovered the issue, the change was applied to our dev cluster, and later that week it was rolled out to production.
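A quick way to see why renaming is fragile is to list which names would still sort after a candidate catch-all name. The helper below is a hypothetical illustration that models the ordering as plain string comparison on lowercase names; it is not part of any tool we used.

```python
def shadowed_by(catch_all, names):
    """Return the names that sort after the catch-all and would be shadowed by it."""
    return sorted(name for name in names if name > catch_all)


# Hypothetical environment and customer names taken from the discussion above.
names = ["pink", "indigo", "yellow", "zzzzork"]

with_void = shadowed_by("void", names)
with_zvoid = shadowed_by("zvoid", names)
with_zzz = shadowed_by("zzz", names)
print(with_void, with_zvoid, with_zzz)
```

Every rename only moves the problem further down the alphabet, which is why an explicit order looked like the more durable fix.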

Conclusion: RTFM.